AI 👾 image captioning - do we have a winner?!

In this article, we try a few free online tools for image captioning on the same image and compare the results.

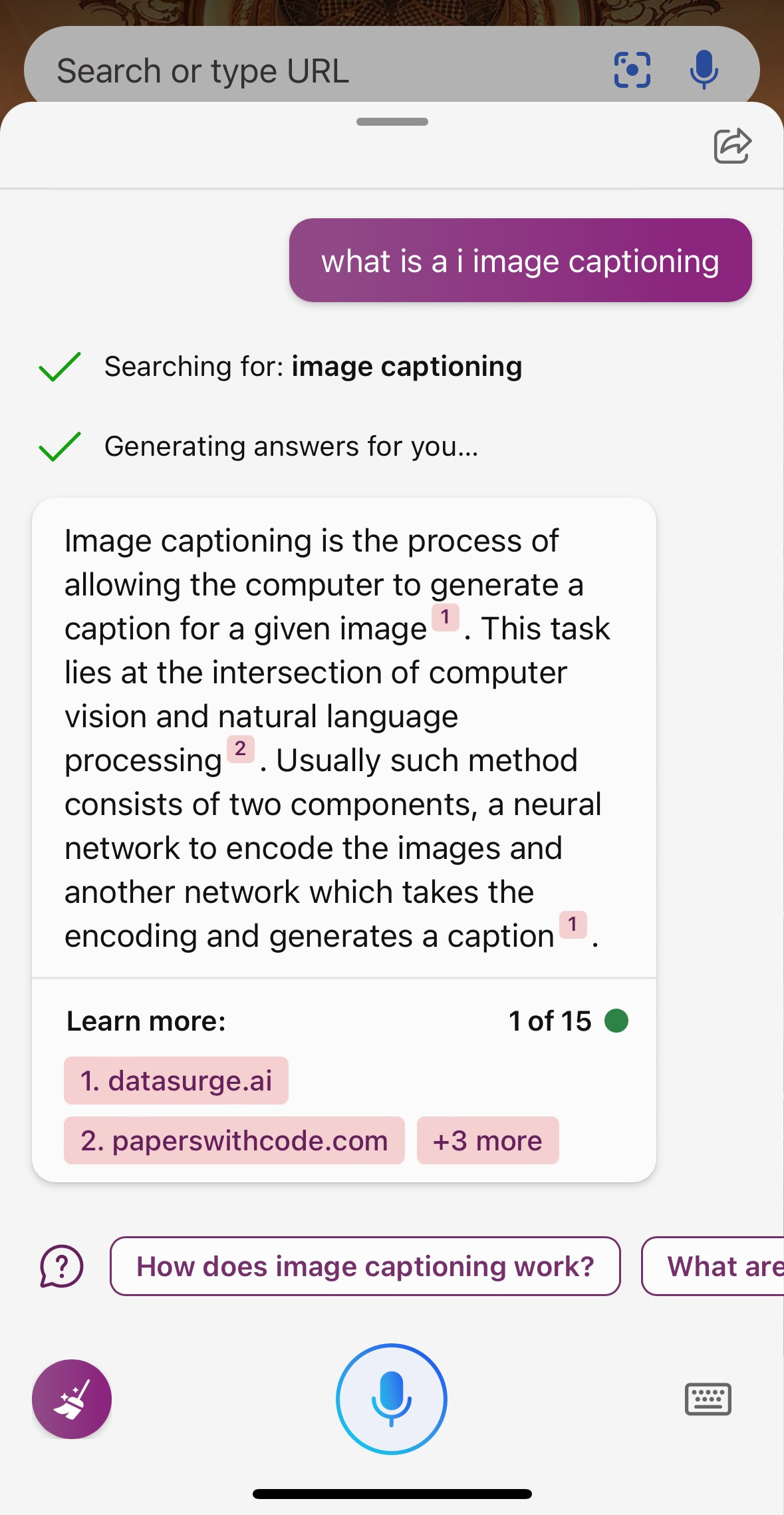

Image captioning - what is it?

We're going to let Bing AI help define it for us :)

In other words, given an image, image captioning (IC) generates a text caption that describes it.

The question is: how good is it?

The method

For the simple comparison, we have chosen a single image. In this case:

The original is a 1.4 MB, 12.5 MP JPEG (3072x4080) taken on a Pixel 7 Pro.

Now, let's see how the IC performed!

The contenders for the totally random, single image 👑 are..

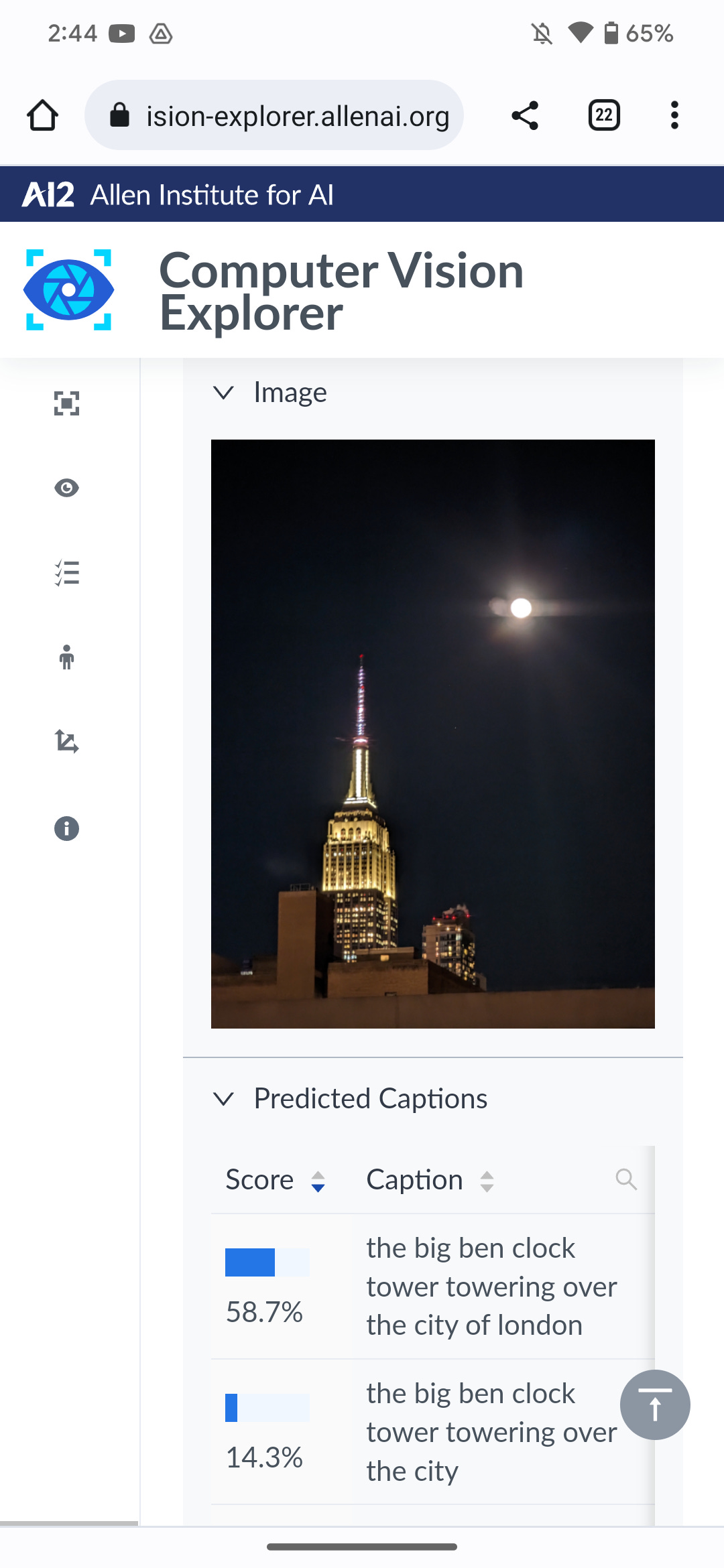

Allen Institute for AI

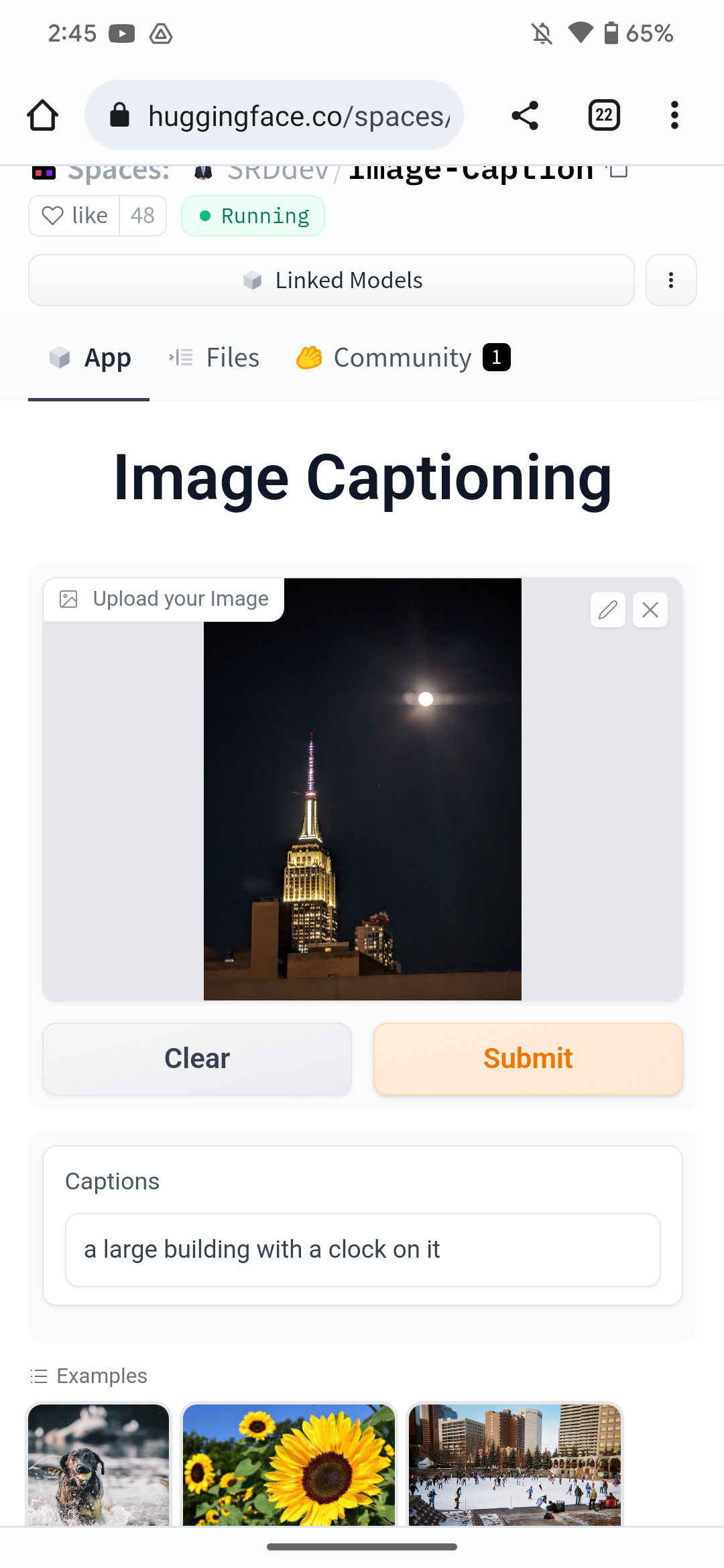

HuggingFace SRDDev/Image-Caption

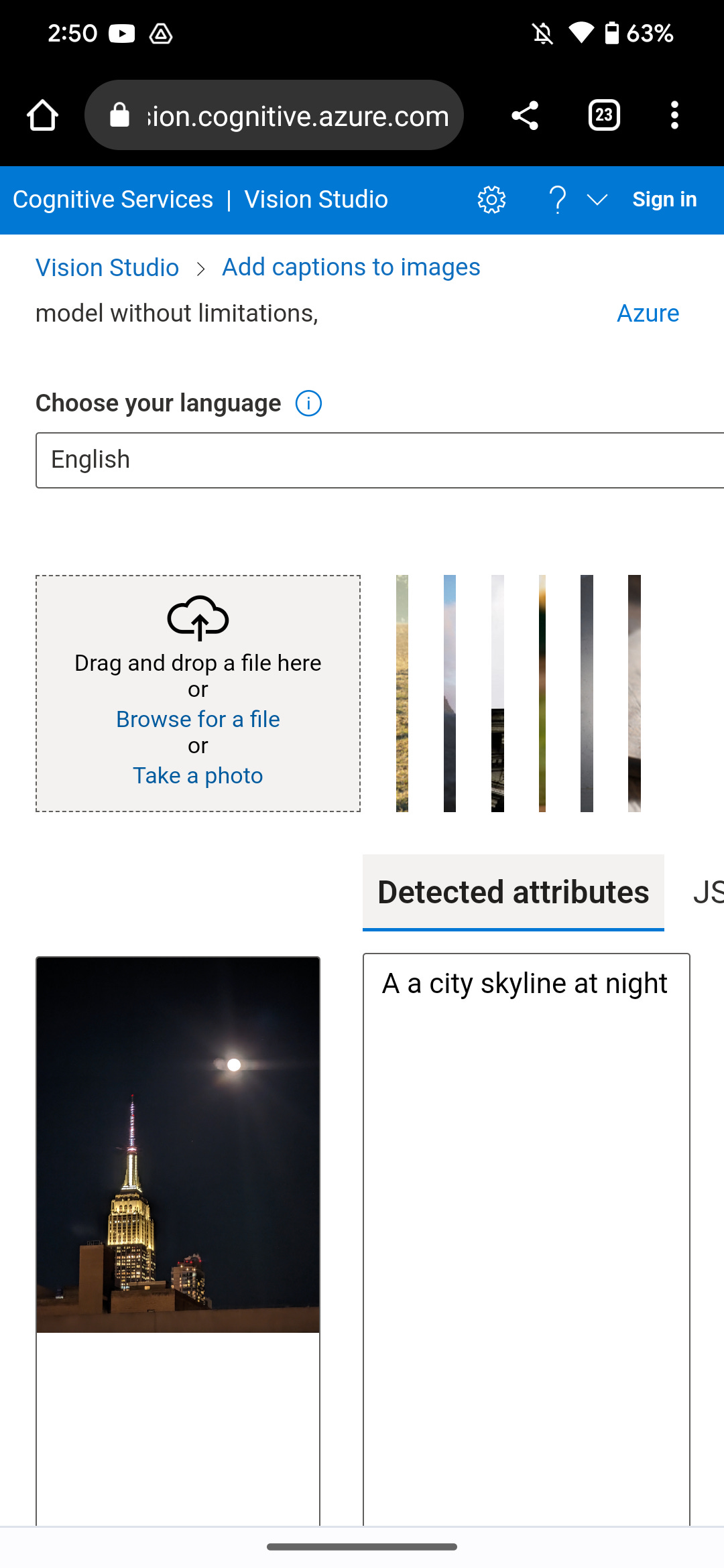

Microsoft Vision Studio

Allen Institute for AI

Well, the result in this case is:

The big ben clock tower towering over the city of london.

Yeah mate, that ain't the Big Ben. And it's the other side of the pond, know what I mean..

Note: the model used in this case is the Bottom-Up & Top-Down (BUTD) Attention model (2018).

Next is:

HuggingFace SRDDev/Image-Caption

Details: It seems to be running the nlpconnect/vit-GPT2-image-captioning model underneath. There are 56 Spaces on HuggingFace using it at the time of publication.

And the result is:

A large building with a clock on it.

Getting closer! But there ain't no clock on the building. Are you alluding to the Big Ben ;)?
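If you'd rather try that model locally than through the Space, here is a minimal sketch. It assumes the `transformers` library (with a version that still ships the `image-to-text` pipeline), plus PyTorch and Pillow. The model id is spelled in lowercase on the assumption that this is how the Hub lists it. The helper names `load_captioner` and `caption_image` and the path `photo.jpg` are made up for illustration. We can't promise it reproduces the Space's exact output, since the Space's settings are unknown to us.

```python
from typing import Callable, Optional

# Assumption: lowercase spelling of the model id mentioned above.
MODEL_ID = "nlpconnect/vit-gpt2-image-captioning"


def load_captioner(model_id: str = MODEL_ID) -> Callable:
    """Build an image-to-text pipeline (downloads the model on first use)."""
    # Imported lazily so this file can be loaded without transformers installed.
    from transformers import pipeline

    return pipeline("image-to-text", model=model_id)


def caption_image(image_path: str, captioner: Optional[Callable] = None) -> str:
    """Return the first generated caption for the image at image_path."""
    if captioner is None:
        captioner = load_captioner()
    # The pipeline returns a list of dicts, each with a "generated_text" key.
    outputs = captioner(image_path)
    return outputs[0]["generated_text"].strip()


# Usage (hypothetical local file):
#   print(caption_image("photo.jpg"))
```

Passing your own `captioner` makes it easy to swap in a different model later without touching the rest of the code.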

Last but not least:

Microsoft Vision Studio - Azure Cognitive Services

A city skyline at night

Well, this… isn't wrong.

So clearly, we have a winner.

Sidenote: it is unclear what models / datasets are being used under the hood.

Final thoughts

This little exercise is by no means a scientific or exhaustive comparison of the available IC methods / models / datasets out there.

It is, however, interesting to see that all 3 tools have totally missed/ignored the moon and none of them have identified the Empire State Building from this angle.
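For the curious, that observation can be checked mechanically. The sketch below takes the three captions exactly as quoted in this article and looks for two terms we would have liked to see. The term list and the `missing_terms` helper are our own illustration, and a plain substring match is a very crude stand-in for a real captioning metric.

```python
# Captions copied verbatim from the results above.
captions = {
    "Allen Institute for AI": "The big ben clock tower towering over the city of london.",
    "HuggingFace SRDDev/Image-Caption": "A large building with a clock on it.",
    "Microsoft Vision Studio": "A city skyline at night",
}

# Things a human would probably mention (our own pick, not a benchmark).
EXPECTED_TERMS = ("moon", "empire state building")


def missing_terms(caption, expected=EXPECTED_TERMS):
    """Return the expected terms that do not appear in the caption."""
    text = caption.lower()
    return [term for term in expected if term not in text]


report = {tool: missing_terms(caption) for tool, caption in captions.items()}

for tool, missing in report.items():
    print(f"{tool}: missing {missing}")
```

Every tool comes back with both terms missing, which matches what we saw by eye.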

We are looking forward to running this example on newer models and datasets. Stay tuned!